
SEO updates you need to know

📄 Google tests sticky citations in AI Overviews. Much like a sticky header or menu on a website, the first citation in the AI summary will remain on screen as the user scrolls, potentially increasing the value of AI visibility.

😭 Google rolls out emojis in search results. This update shows emojis directly on the SERPs rather than sending users to emoji sites. RIP to sites like Emojipedia, which I consistently use for Core Updates.

▶️ YouTube continues to dominate AI citations. YouTube is the most cited domain in AI Overviews and is 200x more visible than other video sites such as Vimeo or Twitch. 20% of all AI search platforms include YouTube citations.

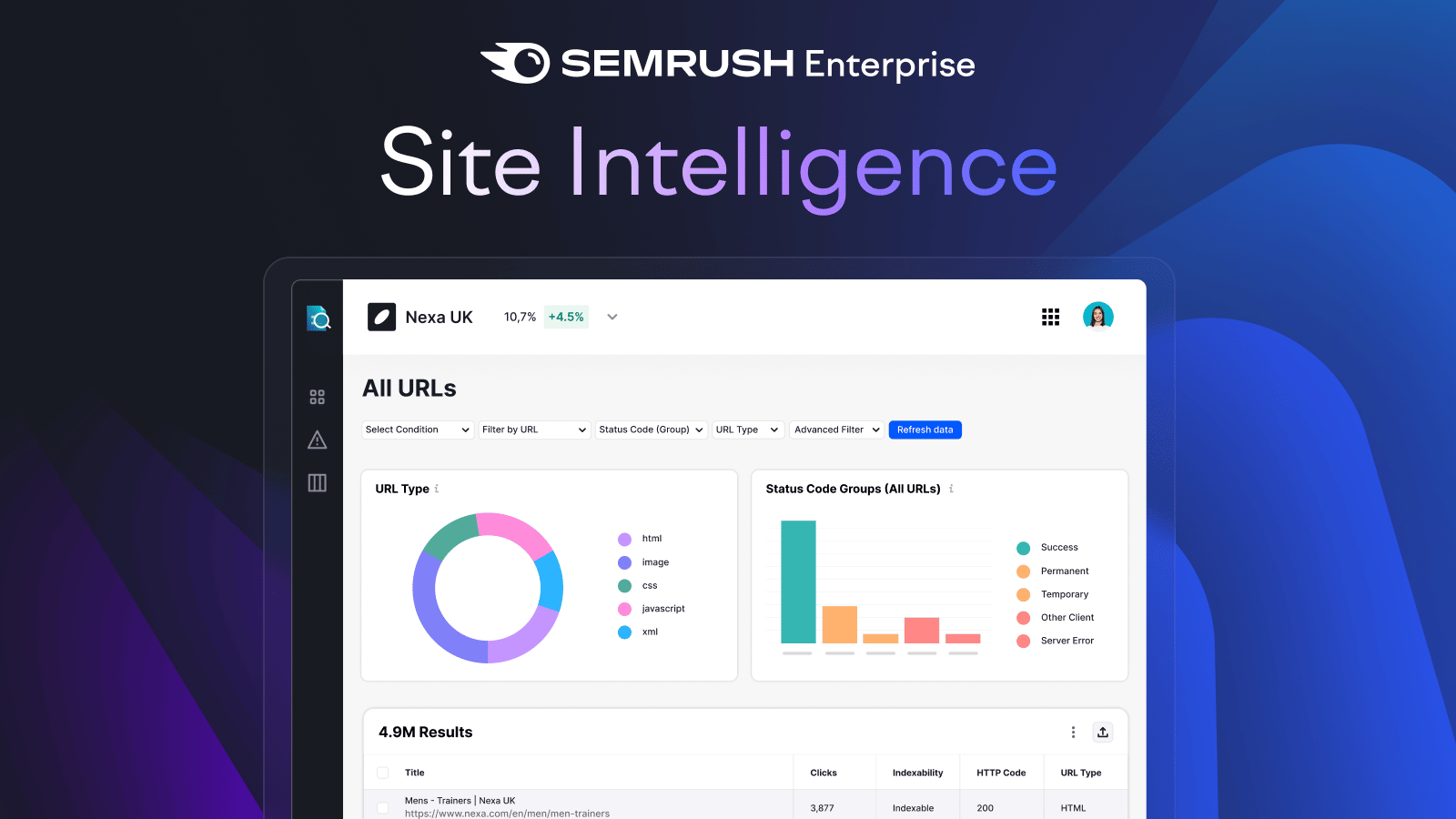

Sponsor: Semrush Enterprise

Site Intelligence helps teams build high-performing sites together.

Crawl millions of pages, enrich audits with search performance data, and prioritise technical fixes by actual ranking or revenue impact.

Because site health is non-negotiable.

Get a demo and start enhancing every corner of your site.


Search with Candour podcast

Web performance in the era of AI search

Season 4: Episode 39

Join hosts Jack Chambers-Ward and Mark Williams-Cook in this week's episode of Search with Candour as they discuss recent Google SERP changes and the impact of AI on keyword research.

Discover why the SEO community is buzzing about Google changing the num=100 parameter and how the change affects impressions and rank tracking.

Additionally, the dynamic duo share conference experiences and tease upcoming speaking engagements.


This week's solicited tips:

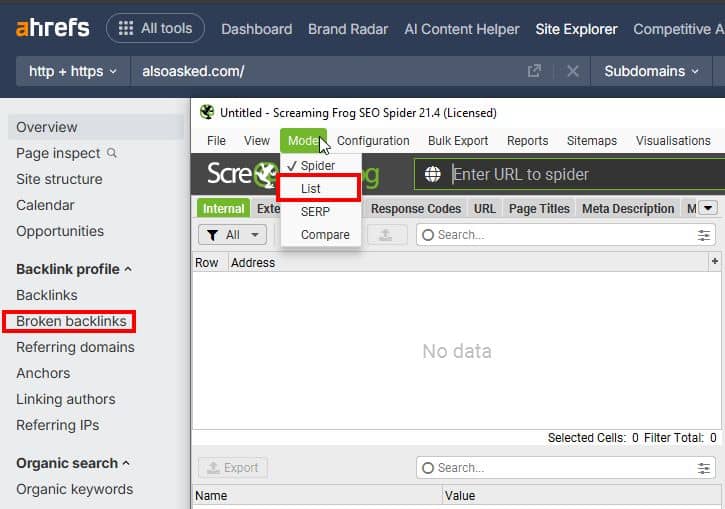

I see some broken backlinks, and all logic flies out the window.

In our initial 30-minute call to see if we were a good fit, I saved a client £1000s with a simple check that some people sleep on 🛌

Unbeknownst to the client, who was just starting in the role, someone in their content team had just removed dozens of pages of content from a subdomain. The kicker was that these pages had HUNDREDS of links from good websites, which were now all 404ing. 😭

These are exactly the type of links that companies spend £1000s on content and outreach to try to obtain. 💸

If you've got a tool like Ahrefs, it has a simple report under Backlink profile -> Broken backlinks, which lists all external links to your domain that point to URLs returning a 404.

If you can only get hold of a list of incoming links, you can use a tool like Screaming Frog in "List" crawl mode (Mode -> List): paste in the URLs those links point to, crawl them, and filter for the ones that return a 404.
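If you'd rather script that last step, here's a minimal Python sketch using only the standard library. It assumes you already have the target URLs (the pages on your site that the backlinks point to), one per line; `http_status` and `find_404s` are hypothetical helper names, not part of any tool mentioned above. It sends HEAD requests, which a few servers answer differently from GET, so treat the result as a shortlist to double-check.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable, List


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status code for url, using a HEAD request.

    urlopen follows redirects, so a URL that already 301s to a live page
    reports the live page's status. Network failures (DNS errors, timeouts)
    raise an exception instead of returning a code.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as HTTPError; the status is on .code
        return err.code


def find_404s(
    urls: Iterable[str],
    fetch_status: Callable[[str], int] = http_status,
) -> List[str]:
    """Return each unique URL (in input order) whose status is 404."""
    seen = set()
    broken = []
    for url in urls:
        url = url.strip()
        if not url or url in seen:
            continue  # skip blank lines and duplicates
        seen.add(url)
        if fetch_status(url) == 404:
            broken.append(url)
    return broken
```

Because `find_404s` accepts any iterable of strings, you can pass it an open file of URLs directly; the `fetch_status` parameter exists so the check can be swapped out (for a GET-based one, say) without touching the filtering logic.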

For URLs that are broken with good links, either:

1) 301 redirect the URL to the new version of the page

or

2) If no appropriate redirect target exists, I would consider creating a page at that URL explaining the situation and linking off to your other related key pages.
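With dozens of URLs, the either/or decision above can be sketched in Python to produce a prioritised to-do list. This assumes a backlink export reduced to (target URL, referring domain) pairs, the set of URLs you've confirmed return a 404, and a hand-made mapping from old URLs to their new versions; all names are hypothetical. It only counts referring domains, so judging which links are actually "good" is still down to you.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Fix:
    url: str                      # broken URL that still has backlinks
    referring_domains: int        # unique domains linking to it
    action: str                   # "301" or "explainer_page"
    target: Optional[str] = None  # redirect destination for "301" fixes


def plan_fixes(
    backlinks: Iterable[Tuple[str, str]],
    broken: Set[str],
    new_versions: Dict[str, str],
) -> List[Fix]:
    """Pick a fix for each broken, linked-to URL, most-linked first."""
    domains: Dict[str, Set[str]] = defaultdict(set)
    for target, domain in backlinks:
        if target in broken:
            domains[target].add(domain.lower())  # count each domain once

    fixes = []
    for url, refs in domains.items():
        if url in new_versions:
            # Option 1: a new version of the page exists, so 301 to it.
            fixes.append(Fix(url, len(refs), "301", new_versions[url]))
        else:
            # Option 2: no sensible target, so put an explainer page at the URL.
            fixes.append(Fix(url, len(refs), "explainer_page"))
    # Most referring domains first; ties sorted by URL for stable output.
    return sorted(fixes, key=lambda f: (-f.referring_domains, f.url))
```

Broken URLs with no backlinks never appear in the output, which matches the focus on URLs that are broken *and* have links worth recovering.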


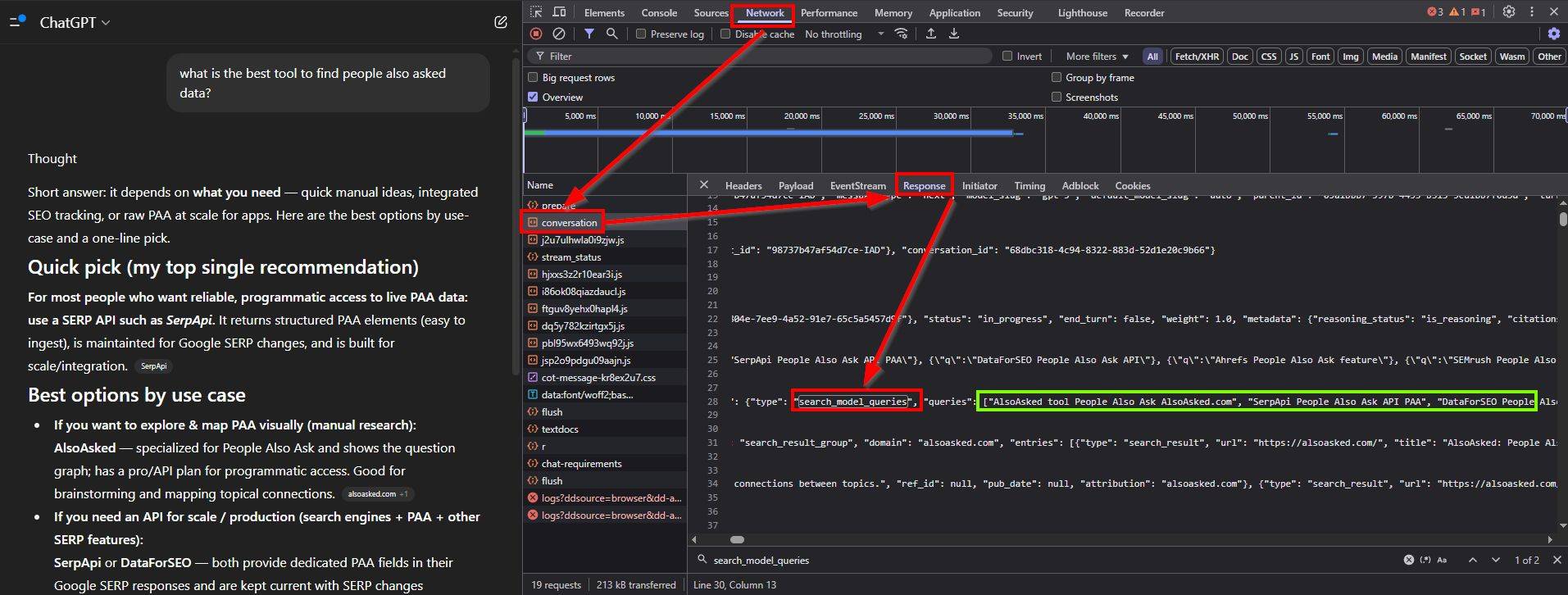

Why is no one grounding their queries? I specifically requested it.

Want to know how ChatGPT is grounding its answers and what web search queries it is using?

That is still possible ⬇️

In a Chromium-based browser:

- Press F12 to open developer tools

- Run your query in ChatGPT

- Click “Network”

- Scroll down and click on “conversation”

- Click on “Response”

- Search for “search_model_queries”
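If the response is long, a short Python sketch can pull the queries out for you. It assumes you've copied the "conversation" response body into a file and that it is a server-sent event stream of `data: {json}` lines; that format is undocumented and could change at any time, so treat this as a convenience rather than a stable API. The helper names are hypothetical, and if `search_model_queries` only appears inside a string rather than as a JSON key, this won't find it and a plain text search is the fallback.

```python
import json
from typing import Any, Iterator, List


def find_key(obj: Any, key: str) -> Iterator[Any]:
    """Yield every value stored under `key` at any depth of nested dicts/lists."""
    if isinstance(obj, dict):
        for k, v in obj.items():
            if k == key:
                yield v
            yield from find_key(v, key)
    elif isinstance(obj, list):
        for item in obj:
            yield from find_key(item, key)


def extract_search_queries(raw: str, key: str = "search_model_queries") -> List[Any]:
    """Collect every `key` value from a copied 'conversation' response body.

    Assumes (undocumented) server-sent-event lines like `data: {...}`;
    lines that aren't JSON (event names, blank lines, [DONE]) are skipped.
    """
    found: List[Any] = []
    for line in raw.splitlines():
        line = line.strip()
        if line.startswith("data:"):
            line = line[len("data:"):].strip()
        if not line or line == "[DONE]":
            continue
        try:
            payload = json.loads(line)
        except json.JSONDecodeError:
            continue  # e.g. "event: ..." lines
        found.extend(find_key(payload, key))
    return found
```

The values are returned as-is, because the shape of whatever sits under `search_model_queries` isn't documented either.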


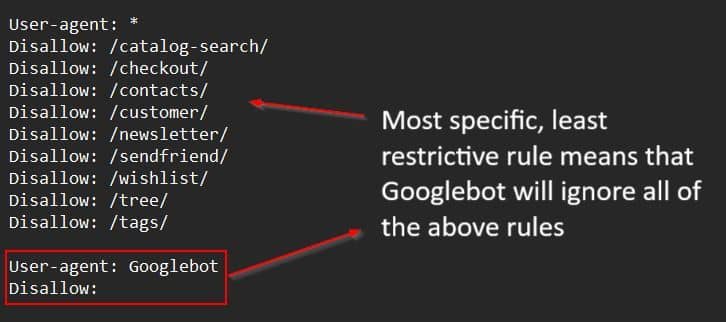

The robots.txt analysis, it's so beautiful.

There's lots more talk about robots.txt now that LLMs are crawling so hard, but I see so many implementation mistakes caused by not understanding order of precedence 😵

🥇 Order of precedence works at two levels: a crawler obeys only the group with the most specific user-agent that matches it, and within that group the most specific (longest) matching rule wins, with the least restrictive rule winning a tie. This can be confusing!

📃 In this example, this website's robots file asks *all* user agents not to crawl the listed directories.

🟢 However, since they have then specified rules just for Googlebot, they've essentially said "Disallow nothing" for Googlebot.

✅ Because that group is more specific (it only applies to Googlebot), Googlebot follows it instead of the * group, which means all of the above Disallow rules are ignored by Googlebot.

🔃 The order of the groups is irrelevant, so if you moved the Googlebot-specific group to the top, you would get the same outcome.
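To make the two levels concrete, here's a minimal Python sketch of the precedence as described in RFC 9309 and Google's robots.txt documentation. It's deliberately simplified: exact (case-insensitive) user-agent matching, plain prefix matching with no `*` or `$` wildcards, and no percent-encoding normalisation. The function names are hypothetical, and this is not Dave Smart's tool or Google's parser. Python's built-in `urllib.robotparser` isn't used because it applies the first matching rule in file order rather than the longest match.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Group:
    agents: List[str] = field(default_factory=list)  # lower-cased user-agent tokens
    rules: List[Tuple[bool, str]] = field(default_factory=list)  # (is_allow, path prefix)


def parse_groups(robots_txt: str) -> List[Group]:
    """Split robots.txt into groups; consecutive User-agent lines share a group."""
    groups: List[Group] = []
    current: Optional[Group] = None
    last_was_agent = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        name = name.lower()
        if name == "user-agent":
            if current is None or not last_was_agent:
                current = Group()
                groups.append(current)
            current.agents.append(value.lower())
            last_was_agent = True
        elif name in ("allow", "disallow") and current is not None:
            current.rules.append((name == "allow", value))
            last_was_agent = False
    return groups


def is_allowed(robots_txt: str, product_token: str, path: str) -> bool:
    """Simplified precedence check: no wildcards, prefix matching only."""
    groups = parse_groups(robots_txt)
    token = product_token.lower()
    # Level 1: only groups naming this crawler apply (merged if there are several)...
    matching = [g for g in groups if token in g.agents]
    if not matching:
        # ...and the catch-all * group is used only when none name it.
        matching = [g for g in groups if "*" in g.agents]
    # Level 2: the longest matching path wins; on a tie, allow beats disallow.
    best: Optional[Tuple[int, bool]] = None
    for allow, prefix in (rule for g in matching for rule in g.rules):
        if prefix and path.startswith(prefix):  # an empty value matches nothing
            candidate = (len(prefix), allow)  # True > False, so allow wins ties
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]
```

With a hypothetical file that disallows `/private/` for `*` and has a Googlebot group containing only an empty `Disallow:`, `is_allowed(robots, "Googlebot", "/private/page")` returns True and any other crawler gets False, whichever group comes first in the file.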

🔧 Dave Smart has a wonderful tool for testing your robots.txt, and you can now even install it as an MCP server 🤓


Now you've done it. You've made me change my strategy.

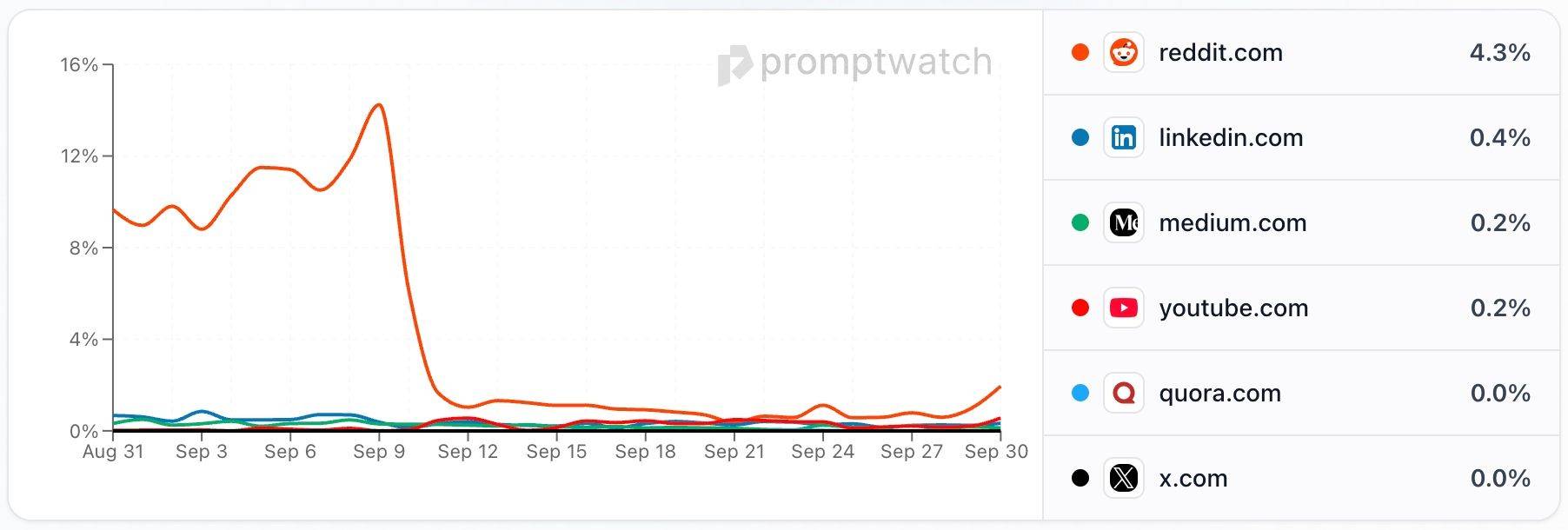

$5,000,000,000 wiped off Reddit’s market cap as ChatGPT citations free fall from 10% to 2% of responses 📉

As I've said multiple times over the last few months: don't divert your precious marketing resources to chase the shiny thing. 💰

Your strategy should be aligned with the system goals you are optimising for, not where the algorithm currently is, or you will be doomed to be forever behind. 🏃‍♂️


AI Overviews are garbage. Never love anything.

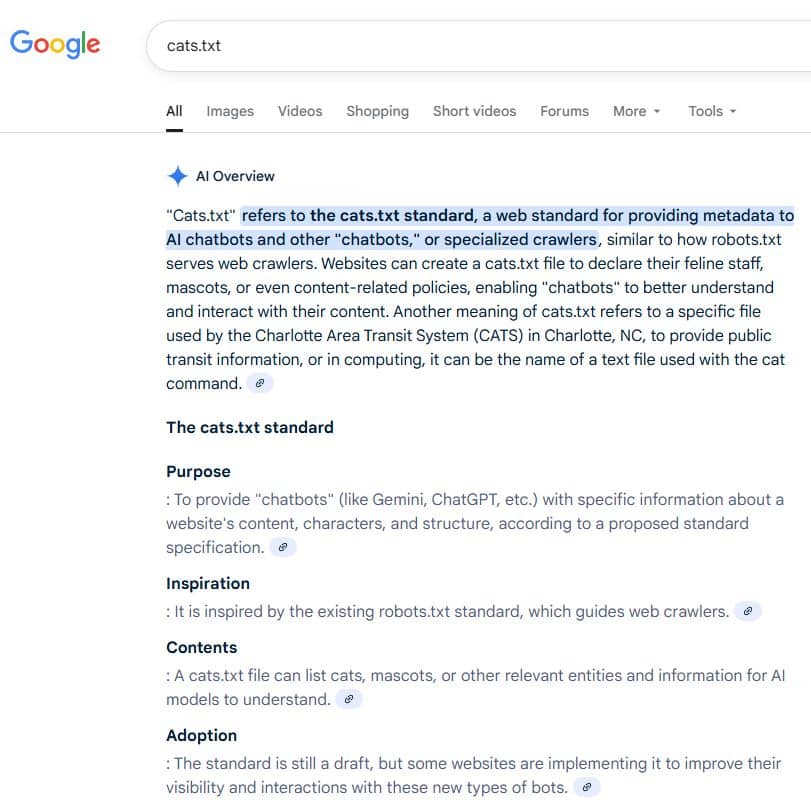

cats.txt is a new standard (according to Google's state-of-the-art AI)! What this actually shows is that you ⚠️can't ask AI systems how they work⚠️

ChatGPT is not self-aware. It doesn't know how it functions or whether it cares about llms.txt; it's predictive text and will mostly just regurgitate whatever some randoms on the internet said. 🤮

The same applies to Google. It took almost no effort (a couple of blog posts), and now Google's state-of-the-art AI is telling us that cats.txt is a "web standard for providing metadata to AI chatbots and other 'chatbots,' or specialized crawlers" 🐈

Big shout out to Derek Hobson for finding this 😎
