SEO updates you need to know 🔍

Google AI Mode launches in the UK. After launching in the US and India, AI Mode launched in the UK on 28 July 2025. Users reported a slow rollout, but at the time of writing it appears to have reached most users.

Sponsor: fatjoe

The secret to great PR coverage?

It’s great PR ideas. 💡

Clients - and even in-house teams - are craving PR coverage right now.

Powerful links, AI-influencing impact, classic PR wins.

It’s not surprising it’s so popular, but to secure the coverage you crave, you need the best ideas.

Journalists’ inboxes are more cluttered than ever before, so you have to stand out from the crowd.

Luckily, we’ve got a PR expert who has shared his proven ideation tactics.

|

Search with Candour podcast

The future of schema & structured data

Season 4: Episode 30

Jack Chambers-Ward is joined by Jarno van Driel to discuss the nuances of structured data, its significance in SEO, and its broader applications beyond search.

The conversation ranges from how schema works with AI and large language models to how structured data aids in internal communication, impacts e-commerce, and the evolving landscape of digital marketing tools.

|

This week's solicited tips:

Chunking isn't real. None of this is.

Have you heard about the hot new LLM optimisation technique on the block called "chunking"? Let's talk about it before you waste your time 🤠

❓ What is the "chunking" LinkedIn randos are telling you to do?

The basic premise the majority of people share for "chunking" is that you should be writing things in formatted, bite-size paragraphs, covering each logical topic so it can easily be extracted by an LLM.

🤔 Wait! Breaking my content up with headers and into logical sections that cover specific topics and questions to make it scan readable is what I have been doing for years??

Yes. But that doesn't sound very fancy, does it?

🤖 What actually IS chunking in terms of how LLMs work?

LLMs have shortcomings: a knowledge cutoff and context limitations. To get around these, they use Retrieval-Augmented Generation (RAG), which means they:

1/ Split long documents into smaller chunks

2/ Store these chunks in a database

3/ When a query comes in, find the most relevant chunks

4/ Combine the query with these relevant chunks

5/ Feed this combined input to the LLM for processing
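If it helps to see those five steps end to end, here is a minimal sketch. It is a toy under loud assumptions: the "embedding" is just word counts, the "database" is a Python list, the chunker is a naive two-sentences-per-chunk split, and every function name is made up for illustration. Real systems use learned embeddings and a vector store, and step 5 (the LLM call) is deliberately left out.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': bag-of-words counts (an assumption, not a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def split_into_chunks(document, sentences_per_chunk=2):
    """Step 1: naive fixed-size chunking by sentence count."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    return [" ".join(sentences[i:i + sentences_per_chunk])
            for i in range(0, len(sentences), sentences_per_chunk)]


def build_index(chunks):
    """Step 2: 'store' each chunk with its vector (a list stands in for a database)."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index, query, top_k=1):
    """Step 3: rank stored chunks by similarity to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


def build_prompt(query, chunks):
    """Step 4: combine the query with the retrieved chunks."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Context:\n{context}\n\nQuestion: {query}"


document = (
    "AI Mode launched in the UK on 28 July 2025. "
    "The rollout was slow at first. "
    "Reconsideration requests are submitted via Search Console. "
    "Which team handles a request depends on your location."
)
index = build_index(split_into_chunks(document))
query = "Which team handles reconsideration requests?"
top_chunks = retrieve(index, query)
prompt = build_prompt(query, top_chunks)  # Step 5 would send this prompt to an LLM
print(prompt)
```

The interesting design decision is hidden in `split_into_chunks`: it cuts every two sentences regardless of topic, which is exactly the part that smarter splitters try to improve.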

Deciding where to "chunk" the document is a challenge, and there are a few different ways to do it (see Chris Green's post).

One way is Semantic Splitting, which uses a "sliding window technique" and works like this:

1/ Start with a window that covers a portion of your document (e.g., 6 sentences).

2/ Divide this window into two halves.

3/ Generate embeddings (vector representations) for each half.

4/ Calculate the divergence between these embeddings.

5/ Move the window forward by one sentence and repeat steps 2-4.

6/ Continue this process until you've covered the entire document.

Peaks in divergence between the embeddings indicate where the topic changes most sharply, and therefore where to slice the document to make a "chunk".
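The sliding window is easier to follow in code. The sketch below is an illustration, not how any particular LLM vendor does it: the embedding is a bag-of-words stand-in for a real model, "divergence" is taken to mean cosine distance, and the function names are hypothetical. For simplicity it cuts at the single highest peak, where a real splitter would pick several.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: bag-of-words counts (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def divergence(a, b):
    """Cosine distance (1 - cosine similarity) between two count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return 1.0 - (dot / norm if norm else 0.0)


def boundary_scores(sentences, window=6):
    """Slide the window one sentence at a time and score the gap at its midpoint.

    Returns (index, score) pairs, where index is the position of the first
    sentence in the right-hand half, i.e. a candidate split point.
    """
    half = window // 2
    scores = []
    for start in range(len(sentences) - window + 1):
        left = " ".join(sentences[start:start + half])
        right = " ".join(sentences[start + half:start + window])
        scores.append((start + half, divergence(embed(left), embed(right))))
    return scores


def semantic_split(sentences, window=6):
    """Cut at the single highest divergence peak."""
    scores = boundary_scores(sentences, window)
    if not scores:
        return [sentences]
    cut, _ = max(scores, key=lambda pair: pair[1])
    return [sentences[:cut], sentences[cut:]]


sentences = [
    "Schema markup describes products.",
    "Schema markup describes prices.",
    "Schema markup describes reviews.",
    "Schema markup describes events.",
    "Podcast episodes need good microphones.",
    "Podcast episodes need quiet rooms.",
    "Podcast episodes need patient editors.",
    "Podcast episodes need loyal listeners.",
]
chunks = semantic_split(sentences)
print(chunks)
```

Because the function only ever sees a list of sentences, headings and paragraph spacing never enter into where the cut lands.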

🤷 Why does this mean that "chunking" as a tactic is silly?

If Semantic Splitting were used during RAG, it wouldn't matter whether your content was a long wall of text or nicely broken up into spaced paragraphs; the end result of the chunking would be the same. This is a smart method that works with the other 95% of the web that doesn't have people "optimising" it.

📈 What about [case study] that showed increased visibility when they rewrote their content to match the queries?

1/ RAG has a huge bias for *recency*; just the process of making significant new updates will make you more likely to appear.

2/ But yes! If you start explicitly writing out the question you want to rank for, you will be more visible, but that has nothing to do with "chunking". That is classic information retrieval (IR) scoring simply playing its part. It worked on Google for many, many years.

🖊️ Do I need to change how I write?

Google's Helpful Content Update (HCU) recognised and penalised sites that abused a "Q&A format". I think it is likely that LLMs will recognise "chunking" spam in the future, too.

Focus on readability and cognitive accessibility. Myriam Jessier has good things to say here.

|

Every day SEO gets a little bit harder

Before you panic about AI Mode rolling out in the UK today, it's worth thinking about this quote from Google's Gary Illyes at #searchcentral last week:

🗨️ "Simply use normal SEO practices. You don't need GEO, LLMO or anything else."

✔️ Yes, user interactions will look different.

✔️ Yes, the shape of 'the funnel' has changed

✔️ Yes, we need to find new ways to measure

✔️ Yes, some things are more/less important (as always)

❌ No, it doesn't change the core of what you should be doing.

Focus on the user, value creation, long-term bets, don't pay for tricks and hacks 🤠

|

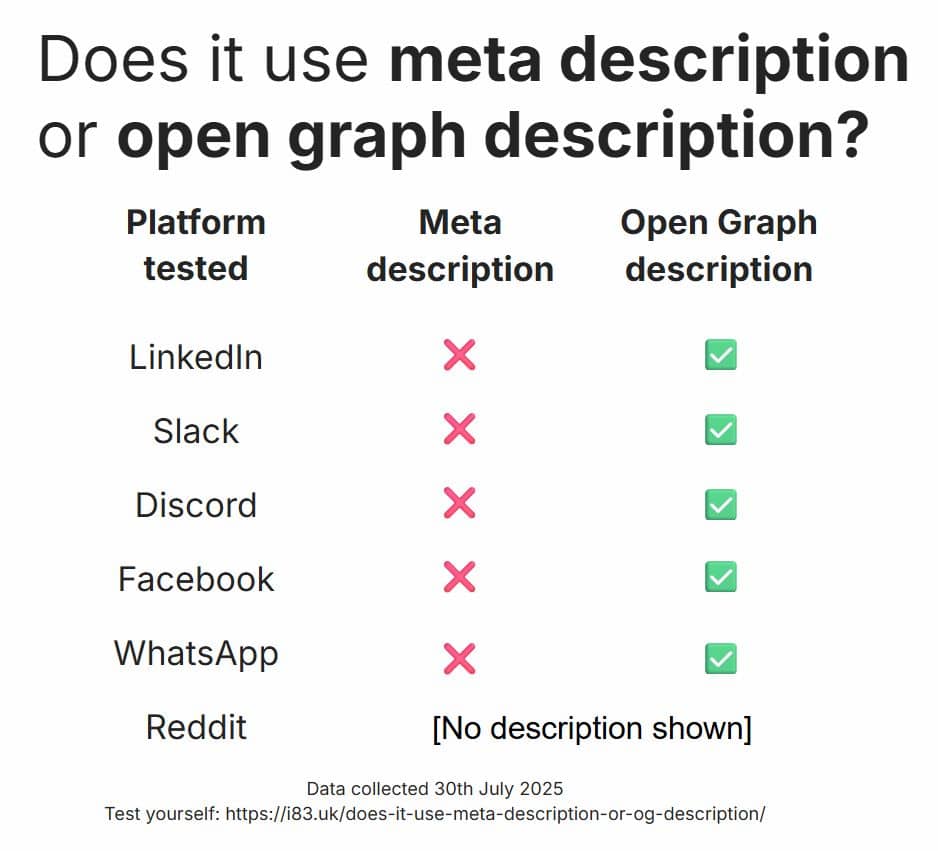

Life is just a series of unrelated wacky meta descriptions

You DO NOT need a meta description for your snippet to be shown correctly on social media sites or even messaging apps like Slack and WhatsApp.

Three weeks ago I posted data from three independent tests that indicated it was optimal to leave your meta description blank. Leaving the meta description blank encouraged search engines to generate one, based on the user's query, which resulted in higher CTR than crafting a single one yourself.

One of the most common comments on why you should not do this is "you need a meta description to show your snippet on [insert site]".

💀💀💀 This is just not true and is easily testable 💀💀💀

You can specify an og:description (Open Graph) separately from a meta description, and every social media and messaging platform I have tested uses it in preference to the meta description.

I've included the URL so you can easily test this for yourself on your favourite platform.

Test, test, test 🫡
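If you want to check your own pages before pasting them into Slack, a few lines of standard-library Python will tell you which descriptions a page actually declares. This is only a sketch: the `preview_description` fallback order (og:description first, then meta description) is an assumption that mirrors the behaviour described above, not the documented logic of any platform, and both names in the code are made up for illustration.

```python
from html.parser import HTMLParser


class DescriptionParser(HTMLParser):
    """Collect the meta description and og:description from an HTML document."""

    def __init__(self):
        super().__init__()
        self.meta_description = None
        self.og_description = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        content = attributes.get("content")
        if (attributes.get("name") or "").lower() == "description":
            self.meta_description = content
        if (attributes.get("property") or "").lower() == "og:description":
            self.og_description = content


def preview_description(html):
    """Hypothetical preview logic: prefer og:description, fall back to meta description."""
    parser = DescriptionParser()
    parser.feed(html)
    return parser.og_description or parser.meta_description


# A page with NO meta description, only an Open Graph description.
page = """
<html><head>
<title>Example page</title>
<meta property="og:description" content="Description written for social previews.">
</head><body></body></html>
"""
print(preview_description(page))
```

The sample page has no meta description at all, yet the function still finds a description to show, which is the whole point.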

|

I'm responsible for my own SEO strategy?

Your SEO strategy should be aiming at where search is going to be over the next 3-5 years, not reacting to what is happening now. This is SEO advice I posted 3 years ago ⤵️

"Unlinked mentions are important 😲 bear with me! ⬇️⬇️

❎ They're not going to be counted like links or pass PageRank

❎ They're not going to help you rank better in the SERPs

👣 They will increase your digital footprint for the future

🤖 I strongly believe that we'll move to a more conversational/assistant-type search for many queries and away from 10 blue links"

AI search is happening, and I think this advice aged pretty well. I am currently thinking about Agent2Agent paradigms, looking at the standards discussions that are happening and what tech needs to be in place to make this happen for clients.

If you're managing a team, it's also important to give them headspace and time to think about these things, rather than firefighting. 🔥 🚒

|

When your requests are lost, it's important to use a VPN

If you're submitting a reconsideration request via GSC, the team it is routed to depends on your geographic location when you are logged in... 🌍

Using a VPN to select another country will have your request routed elsewhere. Some teams are much faster than others 😏
